Neural Network Noise Cleaning

Convolutional and compound neural networks for nonlinear noise mitigation, data quality improvement, and real-time pre-merger early warning.

Research area

Below ~60 Hz, LIGO’s sensitivity is limited not by fundamental quantum noise but by control noise: the auxiliary feedback loops that keep the interferometer locked also inject disturbances into the gravitational-wave readout. These couplings are often nonlinear and non-stationary, so traditional linear subtraction techniques cannot remove them. We use neural networks to learn these couplings and remove the resulting noise directly from detector data.
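Here is a toy illustration of why linear methods miss a bilinear coupling. It assumes a hypothetical slow witness at 1 Hz and a hypothetical fast witness at 50 Hz, not real LIGO channels. When the noise is their product, it is orthogonal to each witness on its own, so the best linear fit to either channel removes essentially nothing. A regressor built from the product removes all of it.

```python
import math

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def fraction_left(target, regressors):
    """Fraction of target power left after least-squares subtraction.

    Projects onto each regressor in turn; this equals a joint least-squares fit
    only because the regressors used below are mutually orthogonal.
    """
    residual = list(target)
    for r in regressors:
        coef = dot(residual, r) / dot(r, r)
        residual = [e - coef * x for e, x in zip(residual, r)]
    return dot(residual, residual) / dot(target, target)

fs = 1000
t = [k / fs for k in range(fs)]                          # 1 s of data at 1 kHz
slow = [math.sin(2 * math.pi * 1.0 * tk) for tk in t]    # hypothetical slow drift, 1 Hz
fast = [math.sin(2 * math.pi * 50.0 * tk) for tk in t]   # hypothetical fast control signal, 50 Hz
noise = [0.5 * s * f for s, f in zip(slow, fast)]        # bilinear coupling into the readout

linear_left = fraction_left(noise, [slow, fast])         # linear fit on each witness removes ~nothing
product = [s * f for s, f in zip(slow, fast)]
bilinear_left = fraction_left(noise, [product])          # product regressor removes ~everything
print(linear_left, bilinear_left)
```

The coupled noise sits at 49 Hz and 51 Hz, where neither witness has any power. That is why no linear filter acting on the witnesses separately can reach it.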

DeepClean

Our foundational framework, DeepClean, trains deep neural networks to map auxiliary sensor channels to the noise they inject into the gravitational-wave readout. Unlike Wiener filters, DeepClean captures nonlinear and non-stationary couplings — the dominant noise mechanisms at low frequencies. Applied to LIGO data, it enhances the signal-to-noise ratio of detected events by ~22% and recovers consistent signal parameters (Ormiston, Nguyen, Coughlin & Adhikari, 2020).
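To see why subtracting low-frequency noise raises SNR, start from the standard matched-filter result ρ² = 4 ∫ |h̃(f)|² / S_n(f) df. Any reduction of the noise power spectral density S_n(f) in a band where the signal has power increases ρ. The sketch below discretises that integral on toy spectra. The amplitude law |h̃(f)| ∝ f^(-7/6) is the leading-order inspiral scaling. The PSD shape and the "cleaning" step (halving the excess power below 60 Hz) are hypothetical numbers chosen for illustration, not DeepClean results.

```python
import math

def optimal_snr(h_abs, psd, df):
    """Discretised matched-filter SNR: rho = sqrt(4 * sum(|h(f)|^2 / S_n(f)) * df)."""
    return math.sqrt(4.0 * df * sum(h * h / s for h, s in zip(h_abs, psd)))

df = 1.0
freqs = [10.0 + k * df for k in range(91)]               # 10-100 Hz grid
h_abs = [f ** (-7.0 / 6.0) for f in freqs]               # inspiral-like |h(f)|, arbitrary units
raw_psd = [1.0 + (60.0 / f) ** 4 for f in freqs]         # hypothetical flat floor + low-frequency excess
clean_psd = [1.0 + 0.5 * (60.0 / f) ** 4 if f < 60.0 else p  # hypothetical: halve excess below 60 Hz
             for f, p in zip(freqs, raw_psd)]

gain = optimal_snr(h_abs, clean_psd, df) / optimal_snr(h_abs, raw_psd, df)
print(f"SNR gain from low-frequency cleaning: {gain:.2f}x")
```

Scaling S_n(f) down by a constant factor k raises ρ by √k. A frequency-dependent reduction like the one above helps in proportion to how much of the signal's SNR accumulates in the cleaned band.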

Physics-informed CNN architecture

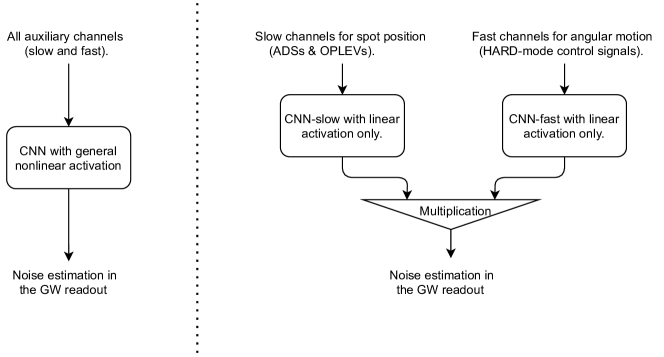

Building on DeepClean, we developed a convolutional neural network with an explicitly physics-informed structure. The key insight is that low-frequency control noise couples bilinearly: slow angular drifts modulate fast oscillatory signals. Our “slow × fast” architecture therefore splits the CNN into two branches, one for slow channels (beam spot monitors) and one for fast channels (angular control signals), and multiplies their outputs. This structured approach outperforms generic CNNs and enables curriculum learning strategies that handle non-stationary noise (Yu & Adhikari, 2022).
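Below is a minimal, hypothetical sketch of the slow × fast idea in plain Python. Each branch here is a single linear 1-D convolution (an FIR filter) summed over its channels, and the predicted noise is the sample-by-sample product of the two branch outputs. The function names, kernel sizes, and toy inputs are illustrative assumptions. The published network uses learned convolutional layers with nonlinear activations in each branch rather than one fixed filter.

```python
import math

def fir(x, kernel):
    """'Valid'-mode 1-D convolution (cross-correlation form, as CNN layers compute it)."""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k)) for i in range(len(x) - k + 1)]

def branch(channels, kernels):
    """Hypothetical one-layer branch: filter each channel, then sum across channels.

    Assumes every kernel within a branch has the same length so outputs line up.
    """
    filtered = [fir(c, k) for c, k in zip(channels, kernels)]
    return [sum(vals) for vals in zip(*filtered)]

def slow_times_fast(slow_chans, slow_kernels, fast_chans, fast_kernels):
    """Predicted noise = slow-branch output x fast-branch output, aligned on the latest samples."""
    s = branch(slow_chans, slow_kernels)
    f = branch(fast_chans, fast_kernels)
    n = min(len(s), len(f))
    return [a * b for a, b in zip(s[-n:], f[-n:])]

N = 64
beam_spot = [[0.01 * k for k in range(N)]]        # hypothetical slowly drifting spot position
angular = [[math.sin(0.9 * k) for k in range(N)]]  # hypothetical fast angular control signal
slow_kernel = [[0.2] * 5]                          # 5-tap moving average (illustrative)
fast_kernel = [[0.5, -0.5, 0.25]]                  # arbitrary 3-tap filter (illustrative)

pred = slow_times_fast(beam_spot, slow_kernel, angular, fast_kernel)
still = slow_times_fast([[0.0] * N], slow_kernel, angular, fast_kernel)
print(len(pred), max(abs(v) for v in still))
```

Because the output is a product, it vanishes whenever the slow branch is zero, however large the fast signal is. This is the bilinear picture built directly into the model: without slow modulation there is no coupled noise, which a single generic branch would have to learn from data.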

Early warning with compound neural networks

The same noise-cleaning framework enables a qualitatively new capability: pre-merger early warning. By reducing low-frequency noise in real time, compound neural networks can detect coalescing binary neutron stars hundreds of seconds before merger at 40 Mpc, and tens of seconds at 160 Mpc. This gives electromagnetic telescopes enough lead time to slew and capture the first moments of a kilonova — a capability that conventional pipelines operating on uncleaned data cannot achieve (Yu, Adhikari, Magee, Sachdev & Chen, 2021).
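Why low-frequency cleaning buys warning time follows from the leading-order (Newtonian) chirp time, τ(f) = (5/256) (G𝓜/c³)^(-5/3) (πf)^(-8/3). This is the time a binary takes to evolve from gravitational-wave frequency f to merger, where 𝓜 is the chirp mass. Because τ ∝ f^(-8/3), a binary neutron star spends most of its in-band time at the lowest frequencies. The sketch ignores post-Newtonian corrections and assumes 1.4 + 1.4 solar-mass components. It gives only the time available above a given frequency, not the detection lead times quoted above, which also depend on how quickly SNR accumulates above threshold.

```python
import math

G_MSUN_OVER_C3 = 4.925491e-6  # G * M_sun / c^3, in seconds

def chirp_mass(m1, m2):
    """Chirp mass in solar masses: (m1*m2)^(3/5) / (m1+m2)^(1/5)."""
    return (m1 * m2) ** 0.6 / (m1 + m2) ** 0.2

def time_to_merger(f_hz, mchirp_msun):
    """Leading-order (Newtonian) time from GW frequency f_hz to coalescence, in seconds."""
    tm = mchirp_msun * G_MSUN_OVER_C3
    return (5.0 / 256.0) * tm ** (-5.0 / 3.0) * (math.pi * f_hz) ** (-8.0 / 3.0)

mc = chirp_mass(1.4, 1.4)  # assumed component masses
for f_low in (10.0, 20.0, 30.0):
    print(f"{f_low:4.0f} Hz -> {time_to_merger(f_low, mc):7.1f} s")
```

Under these assumptions τ is roughly 1000 s from 10 Hz, roughly 160 s from 20 Hz, and under a minute from 30 Hz. Each hertz of usable bandwidth recovered at the low end is therefore worth far more warning time than the same gain at higher frequencies.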

Open questions

- Real-time deployment: DeepClean and its successors currently run on recorded data. Deploying neural network noise subtraction in real time — with latency low enough for early-warning alerts — requires inference times under ~100 ms on streaming data. What hardware and software architectures make this feasible?

- Generalization across observing runs: Noise couplings change between observing runs as hardware is modified. How quickly can models be retrained or adapted when the detector configuration changes, and can transfer learning reduce the retraining burden?

- Next noise sources: Below ~30 Hz, after control noise is subtracted, what noise sources remain dominant? Newtonian noise, scattered light, and suspension thermal noise each require different mitigation strategies — can neural networks help identify and subtract these as well?

- Validation and trust: Gravitational-wave science requires confidence that noise subtraction doesn’t distort astrophysical signals. What validation frameworks ensure that neural network cleaning preserves signal integrity across the parameter space of compact binary mergers?
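One natural check, sketched here under simplifying assumptions, is an injection test. Add a known waveform to the raw strain, clean both the injected and uninjected data with the same deterministic cleaner, and compare their difference with what was injected. The overlap measures whether the shape is preserved and the amplitude ratio measures bias; both should equal 1 for a cleaner that leaves signals untouched. The inner product here is unweighted (white noise assumed, whereas real analyses weight by the PSD). The toy data, the witness-only `good_clean`, and the `leaky_clean` that also filters the strain are all hypothetical.

```python
import math

def inner(a, b):
    """Unweighted inner product (assumes white noise; real analyses weight by the PSD)."""
    return sum(x * y for x, y in zip(a, b))

def injection_check(clean, raw, signal):
    """Return (overlap, amplitude_ratio) of the signal recovered through `clean`."""
    injected = [r + s for r, s in zip(raw, signal)]
    # Differencing cleaned-with and cleaned-without isolates what cleaning did to the signal.
    recovered = [a - b for a, b in zip(clean(injected), clean(raw))]
    ss = inner(signal, signal)
    rs = inner(recovered, signal)
    return rs / math.sqrt(inner(recovered, recovered) * ss), rs / ss

fs = 1000
t = [k / fs for k in range(fs)]
witness = [math.sin(2 * math.pi * 3.0 * tk) for tk in t]
raw = [0.8 * w + 0.1 * math.sin(2 * math.pi * 137.0 * tk) for w, tk in zip(witness, t)]
chirp = [0.1 * math.sin(2 * math.pi * (10.0 * tk + 10.0 * tk * tk)) for tk in t]  # sweeps 10 -> 30 Hz

def good_clean(d):
    # Subtracts a prediction built only from the witness channel.
    return [x - 0.8 * w for x, w in zip(d, witness)]

def leaky_clean(d):
    # Also applies a two-tap filter to the strain itself, so it acts on any signal present.
    return [d[i] - 0.5 * (d[i - 1] if i > 0 else 0.0) for i in range(len(d))]

good = injection_check(good_clean, raw, chirp)
leaky = injection_check(leaky_clean, raw, chirp)
print(good, leaky)
```

In this toy, the leaky cleaner keeps the waveform's shape fairly well (overlap close to 1) but roughly halves its amplitude. That is why a validation framework needs to track both numbers, and to repeat the test across the mass, spin, and distance range of interest.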