Digital-Twin Diagnostics & Forecasting

High-fidelity simulation models of gravitational-wave detectors that enable training ML controllers, accelerating commissioning, and predicting failures before they impact observations.

Research area

A gravitational-wave interferometer has thousands of coupled degrees of freedom — optical, mechanical, thermal, electronic — and its behavior changes hour to hour as alignment drifts, seismic conditions shift, and control loops interact. Commissioning a new detector (or recovering from a hardware change) requires painstaking manual diagnosis. Digital twins — high-fidelity simulation models of the full instrument — can compress this process by letting us test hypotheses, train controllers, and predict failures in silico before touching the real machine.

SimPlant

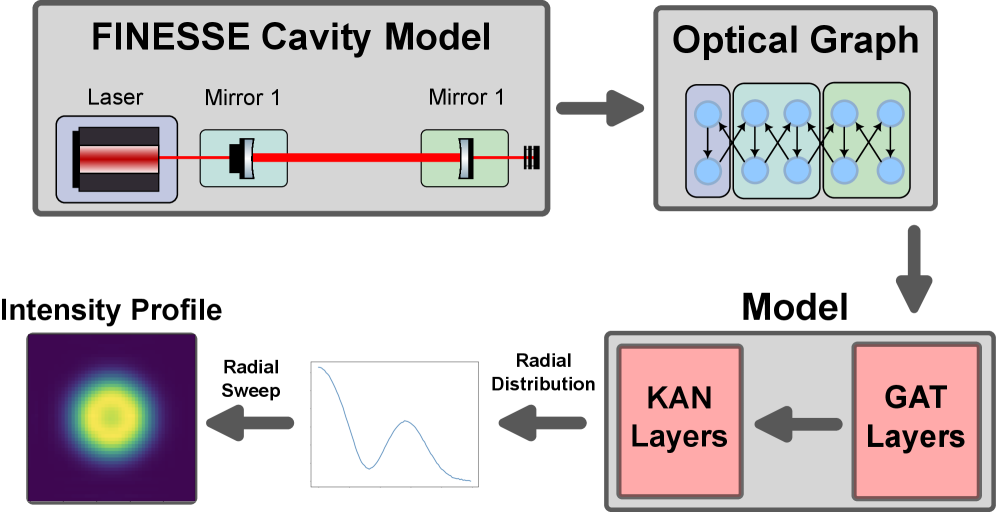

Our digital-twin framework, SimPlant, assembles a complete interferometer simulation from specialized physics engines:

- Optical fields: Finesse — the standard tool for modeling coupled optical cavities — computes the steady-state and frequency-dependent response of the interferometer, including higher-order spatial modes, mirror figure errors, and radiation pressure effects. SimPlant wraps Finesse to enable time-domain simulation of field evolution during lock acquisition and transient events.

- Mechanical suspensions: Multi-stage pendulum models (quadruple suspensions for test masses, triple suspensions for auxiliary optics) with the measured mechanical transfer functions and cross-couplings used at the LIGO sites. Seismic input is injected at the top stage using measured ground motion spectra.

- Thermal transients: Finite-element thermal models track mirror heating from absorption, ring heater actuation, and CO₂ laser compensation — the same physics modeled in the adaptive optics project. Thermal lenses evolve on minutes-to-hours timescales, creating slow drifts that challenge control loops.

- Feedback electronics: All major servo loops (DARM, CARM, MICH, PRCL, SRCL, angular alignment, intensity stabilization) are modeled using the actual digital filter coefficients deployed at the sites, exported from the LIGO CDS (Control and Data System) configuration files.

- Realistic noise injection: Seismic, thermal, shot, radiation pressure, and technical noise sources are injected with measured or modeled power spectral densities, producing synthetic data streams statistically consistent with real detector output at the channel level.

The result is a simulation that produces time-series data in the same format as the real detector — a critical requirement for training and validating ML systems that must eventually process real data.
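
As a rough illustration of the noise-injection step, the sketch below colors white Gaussian noise in the frequency domain so that the output follows a target one-sided power spectral density. It is not SimPlant code: the function name `synthesize_noise`, the toy `1/f^2` spectrum, and the sample rate are assumptions chosen for the example, and it relies only on NumPy.

```python
import numpy as np

def synthesize_noise(psd_func, fs, n_samples, rng):
    """Draw a real Gaussian time series whose one-sided PSD follows psd_func(f).

    psd_func maps an array of frequencies (Hz) to PSD values (units^2/Hz).
    """
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    psd = np.zeros_like(freqs)
    psd[1:] = psd_func(freqs[1:])              # leave the DC bin at zero
    # Real and imaginary parts each get variance PSD * fs * N / 4, so that
    # E|X_k|^2 = PSD * fs * N / 2, the one-sided periodogram convention.
    sigma = np.sqrt(psd * fs * n_samples / 4.0)
    spectrum = sigma * (rng.standard_normal(freqs.size)
                        + 1j * rng.standard_normal(freqs.size))
    # irfft keeps only the real part of the Nyquist bin, so that single bin
    # carries half the target power; this is negligible for long records.
    return np.fft.irfft(spectrum, n=n_samples)

# Hypothetical usage: a toy 1/f^2 spectrum sampled at 256 Hz.
noise = synthesize_noise(lambda f: 1e-6 / f**2, fs=256.0, n_samples=4096,
                         rng=np.random.default_rng(0))
```

Summing several such streams, one per noise source, gives a composite channel. Matching real detector output at the channel level would additionally require the measured spectra and the coupling paths, which this sketch does not model.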

The sim-to-real gap

The central challenge for any simulation-trained controller is the sim-to-real gap: discrepancies between the model and the real instrument that cause a controller optimized in simulation to underperform (or fail) on hardware. This problem is well-studied in robotics, where strategies include domain randomization (training on an ensemble of perturbed simulations), system identification (fitting model parameters to real data), and progressive transfer (fine-tuning on increasing amounts of real data).

For LIGO, the sim-to-real gap has specific physical origins: unmodeled mechanical resonances in the suspensions, frequency-dependent phase errors in the electronics, optical mode mismatch that changes with alignment, and non-stationary noise sources that are absent from the model. The RL controller work addresses this by training in simulation with domain randomization (varying plant parameters over physically plausible ranges) and then fine-tuning on the real detector with conservative exploration policies. SimPlant therefore does not need to match the instrument perfectly; it needs to be accurate enough that the domain-randomized ensemble includes the real plant.
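
The domain-randomization idea can be sketched in a few lines. The parameter names, nominal values, and fractional spreads below are hypothetical placeholders, not SimPlant settings. The sketch only shows how an ensemble of perturbed plants might be drawn, and how one could check that a measured plant falls inside the randomized ranges.

```python
import random

# Hypothetical nominal plant parameters and fractional half-widths of the
# randomization ranges; real values would come from system identification.
NOMINAL = {"pendulum_freq_hz": 0.45, "optical_gain": 1.0, "loop_delay_s": 200e-6}
SPREAD = {"pendulum_freq_hz": 0.05, "optical_gain": 0.20, "loop_delay_s": 0.30}

def sample_plant(rng):
    """Draw one perturbed plant, each parameter uniform within its range."""
    return {name: value * (1.0 + rng.uniform(-SPREAD[name], SPREAD[name]))
            for name, value in NOMINAL.items()}

def ensemble_covers(measured):
    """True if every measured parameter lies inside its randomization range."""
    return all(abs(measured[name] / NOMINAL[name] - 1.0) <= SPREAD[name]
               for name in NOMINAL)

rng = random.Random(0)
ensemble = [sample_plant(rng) for _ in range(1000)]  # one plant per training episode
```

A check like `ensemble_covers` is only a per-parameter test. It says nothing about unmodeled dynamics such as the extra suspension resonances mentioned above, which widening parameter ranges cannot capture.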

Applications

Training ground for ML controllers. The reinforcement-learning controllers deployed at LIGO Livingston were first trained and validated in SimPlant before touching the real detector. The simulation provides a safe environment where RL agents can explore aggressive control strategies (including ones that would crash a real interferometer) without risk. Training in simulation is also faster wherever the model runs faster than real time, which enables millions of training episodes that would take years on hardware; full-fidelity broadband simulation is currently much slower than real time (see Open questions).

Commissioning acceleration for LIGO India. A new interferometer takes years to commission from first light to design sensitivity. The process involves measuring the alignment sensing matrix, locking each cavity degree of freedom sequentially, shaping loop gains to suppress noise without introducing instabilities, and hunting down unexpected noise couplings. A digital twin can predict the behavior of a newly assembled instrument at each commissioning stage, identify likely failure modes, and pre-optimize control parameters, potentially compressing years of commissioning into months. We are developing SimPlant models specifically for LIGO India using the as-built instrument parameters.
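
To make the loop-shaping step concrete, here is a toy stability check of the kind a twin could run before a gain is tried on hardware. Everything in it is an assumption for illustration: a single pendulum stage as the plant, a simple lead filter as the controller, a pure time delay for the digital system, and made-up numbers throughout. It scans for the unity-gain frequency and reports the phase margin there.

```python
import cmath
import math

def open_loop(f, gain, delay_s=200e-6, f0=1.0, q=10.0, f_lead=20.0, f_roll=500.0):
    """Toy open-loop response at frequency f (Hz); every default is hypothetical."""
    plant = f0**2 / (f0**2 - f**2 + 1j * f * f0 / q)       # single pendulum stage
    lead = (1 + 1j * f / f_lead) / (1 + 1j * f / f_roll)    # phase lead near crossover
    delay = cmath.exp(-2j * math.pi * f * delay_s)          # digital processing delay
    return gain * plant * lead * delay

def unity_gain_and_margin(gain, delay_s=200e-6, f_lo=5.0, f_hi=2000.0, n=20000):
    """Return (unity-gain frequency in Hz, phase margin in degrees), or None
    if the loop gain never falls through 1 inside the scanned band."""
    prev_mag = abs(open_loop(f_lo, gain, delay_s))
    for i in range(1, n):
        f = f_lo * (f_hi / f_lo) ** (i / (n - 1))           # log-spaced scan
        g = open_loop(f, gain, delay_s)
        if prev_mag >= 1.0 and abs(g) < 1.0:
            # Distance of the phase from -180 degrees, wrapped to (-180, 180].
            margin = (180.0 + math.degrees(cmath.phase(g))) % 360.0
            if margin > 180.0:
                margin -= 360.0                              # negative margin
            return f, margin
        prev_mag = abs(g)
    return None

nominal = unity_gain_and_margin(gain=2000.0)                 # short delay
slow = unity_gain_and_margin(gain=2000.0, delay_s=3e-3)      # much longer delay
```

In this toy loop the longer delay pushes the phase margin negative, the kind of instability that is cheaper to find in simulation than on the instrument. A real commissioning study would use the modeled multi-stage suspension response and the deployed filter coefficients instead of these placeholders.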

Predictive diagnostics. Comparing real-time detector data against the digital twin’s predictions makes anomalies visible as deviations from the model. If the real DARM spectrum suddenly develops excess noise at 60 Hz that the model does not predict, the mismatch flags a new environmental coupling. If the alignment signals drift in a pattern consistent with a specific optic shifting, the twin can identify which mirror is responsible before a human operator would notice. This enables early detection of degrading components, drifting alignment, or emerging noise sources before they reduce the observing duty cycle.
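
A minimal sketch of the spectral comparison described above: estimate the measured spectrum with averaged periodograms, divide by the twin's predicted spectrum, and flag bins where the ratio exceeds a threshold. The function names, the threshold of 5, and the synthetic data with a 60 Hz line are assumptions for the example; only NumPy is used.

```python
import numpy as np

def averaged_psd(x, fs, nseg):
    """One-sided PSD from nseg non-overlapping, Hann-windowed segments."""
    seg_len = len(x) // nseg
    window = np.hanning(seg_len)
    norm = fs * np.sum(window**2)
    segments = x[: seg_len * nseg].reshape(nseg, seg_len)
    spectra = np.fft.rfft(segments * window, axis=1)
    psd = 2.0 * np.mean(np.abs(spectra) ** 2, axis=0) / norm
    freqs = np.fft.rfftfreq(seg_len, d=1.0 / fs)
    return freqs, psd

def flag_excess(freqs, measured_psd, model_psd, ratio_threshold=5.0):
    """Frequencies where the measured PSD exceeds the model by the threshold."""
    ratio = measured_psd / model_psd
    return freqs[ratio > ratio_threshold]

# Synthetic stand-in for detector data: unit-variance white noise plus a
# 60 Hz line that the (white) model spectrum does not contain.
rng = np.random.default_rng(1)
fs = 1024.0
t = np.arange(int(64 * fs)) / fs
data = rng.standard_normal(t.size) + 0.5 * np.sin(2 * np.pi * 60.0 * t)

freqs, measured = averaged_psd(data, fs, nseg=64)
model = np.full_like(freqs, 2.0 / fs)       # one-sided PSD of unit-variance white noise
flagged = flag_excess(freqs, measured, model)
```

The threshold trades false alarms against sensitivity. With more averages the noise-only ratio clusters more tightly around 1, so a lower threshold becomes usable at the cost of slower response to new features.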

Prototyping at the 40m. The Caltech 40m prototype interferometer serves as a testbed for digital twin validation. SimPlant models of the 40m can be compared directly against real data, providing a controlled environment to measure and improve model fidelity before scaling to the full LIGO detectors.

Open questions

- Model fidelity threshold: How accurately must a digital twin reproduce the real instrument for ML controllers trained in simulation to transfer successfully? Quantifying the required fidelity for each application (controller training, commissioning prediction, noise hunting) would guide where to invest modeling effort.

- Computational cost: Full-fidelity time-domain simulation of a km-scale interferometer with all control loops is computationally expensive — currently much slower than real time for broadband simulation. Reduced-order models (modal truncation, surrogate models trained on full simulations) could enable faster-than-real-time training, but at the cost of fidelity in edge cases.

- Adaptive twins: The real detector changes continuously (mirror contamination, electronics aging, alignment drift, seasonal temperature variations). The twin must track these changes — options include periodic recalibration from system identification measurements, continuous Bayesian parameter estimation from the data stream, or hybrid approaches that update different model components on different timescales.

- Generalization across detectors: Can a SimPlant model developed for LIGO Livingston transfer to LIGO Hanford or LIGO India with parameter adjustments, or do site-specific effects (local seismic environment, vacuum system differences, electronics variations) require building each twin from scratch?
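
For the adaptive-twin question, one of the options listed above (continuous Bayesian parameter estimation) can be illustrated with the simplest possible case: a scalar Kalman filter that tracks a single slowly drifting model parameter from noisy measurements. The random-walk drift model, the variances, and the function name `track_parameter` are assumptions for this sketch, not a description of how SimPlant is updated.

```python
def track_parameter(measurements, meas_var, drift_var, init_mean, init_var):
    """Recursive Bayesian estimate of one drifting parameter.

    Assumes the parameter performs a random walk with per-step variance
    drift_var and is observed directly with Gaussian noise of variance meas_var.
    Returns the list of (posterior mean, posterior variance) after each sample.
    """
    mean, var = init_mean, init_var
    history = []
    for z in measurements:
        var += drift_var                  # predict: uncertainty grows with drift
        gain = var / (var + meas_var)     # update: weight of the new measurement
        mean += gain * (z - mean)
        var *= 1.0 - gain
        history.append((mean, var))
    return history

# Hypothetical usage: 200 identical readings of a parameter whose prior guess was 0.
estimates = track_parameter([2.0] * 200, meas_var=0.01, drift_var=1e-6,
                            init_mean=0.0, init_var=1.0)
final_mean, final_var = estimates[-1]
```

The ratio of `drift_var` to `meas_var` sets how quickly the estimate forgets old data, which is one way to express the idea of updating different model components on different timescales: fast-drifting parameters get a larger `drift_var` than slowly aging ones.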