Computational Experiment Design

Using optimization and computational search to design experiments that maximize sensitivity to quantum-gravity signatures, systematically exploring interferometer topologies and measurement protocols.

Research area

Designing an experiment to test quantum gravity is not just a hardware challenge — it’s an optimization problem. The space of possible interferometer configurations, measurement protocols, and readout strategies is vast, and human intuition alone cannot guarantee that the best experimental design has been found. We use computational search and optimization techniques to systematically explore this design space, identifying experimental setups that maximize sensitivity to specific quantum-gravity signatures while remaining technically feasible.

Why computation matters here

Traditional experimental design in physics relies on physical intuition: a theorist proposes a signal, and an experimentalist designs an apparatus to look for it. But quantum-gravity signals are so weak, and the design space so large, that this approach can overlook more sensitive configurations. Consider the choices involved in designing a single interferometer-based test:

- Topology: Michelson, Sagnac, Mach-Zehnder, or hybrid configurations, each with different sensitivity profiles

- Readout: Homodyne, heterodyne, photon counting, or adaptive measurement — each optimal for different signal types

- Quantum state: Coherent light, squeezed vacuum, entangled states, or non-Gaussian states — each with different noise properties

- Mechanical elements: Mass, geometry, suspension, temperature — each affecting the coupling to gravitational effects

The combinatorial explosion means that even experienced experimentalists explore only a tiny fraction of the possibilities. Computational methods can search systematically.

Fisher information as a design metric

The Fisher information provides a rigorous framework for experiment optimization. For a parameter of interest θ (say, the amplitude of holographic noise or the decoherence rate from a specific quantum-gravity model), the Fisher information F(θ) quantifies how much information a given measurement extracts about θ; with several parameters it generalizes to the Fisher information matrix. The Cramér-Rao bound guarantees that no unbiased estimator of θ can achieve a variance below 1/F(θ).
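For reference, the standard single-parameter definitions can be written out as follows. The symbols p(x | θ) for the likelihood of a measurement record x, θ̂ for an unbiased estimator, and N for the number of independent repetitions are notation introduced here for illustration, not taken from the text above. Setting N = 1 and reading F(θ) as the information of the full data set recovers the bound quoted above.

```latex
F(\theta) = \mathbb{E}_{x \sim p(x \mid \theta)}
  \left[ \left( \frac{\partial}{\partial \theta} \ln p(x \mid \theta) \right)^{2} \right],
\qquad
\operatorname{Var}(\hat{\theta}) \;\ge\; \frac{1}{N \, F(\theta)} .
```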

This turns experiment design into an optimization problem with a clear objective: maximize F(θ) over the space of experimental configurations, subject to feasibility constraints. For an interferometer, F(θ) depends on the topology, readout scheme, quantum state of the input light, and the noise budget — all of which can be parameterized and optimized numerically.
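As a rough illustration of this optimization loop, the sketch below runs a constrained grid search over a two-parameter toy design space. Everything in it is an assumption made for the example: the scaling laws in `toy_spectra`, the limits in `is_feasible`, the arbitrary units, and all function names are hypothetical and are not taken from any real detector model. The Fisher information formula assumes Gaussian data in independent frequency bins whose power is θ times a signal template plus noise.

```python
import itertools
import math


def fisher_information(theta, signal_psd, noise_psd, n_averages):
    """Fisher information about the amplitude theta of a stochastic signal.

    Assumes independent frequency bins with Gaussian data of total power
    S_k = theta * signal_psd[k] + noise_psd[k], each averaged n_averages times.
    """
    return n_averages * sum(
        (h / (theta * h + n)) ** 2 for h, n in zip(signal_psd, noise_psd)
    )


def toy_spectra(arm_length, power, freqs):
    """Hypothetical scaling laws in arbitrary units, for illustration only."""
    # Signal template grows with arm length and rolls off above 1 MHz.
    signal = [arm_length / (1.0 + (f / 1e6) ** 2) for f in freqs]
    # Shot-like noise falls with power; a heating-like term rises with it.
    noise_level = 1.0 / power + 1e-3 * power
    noise = [noise_level for _ in freqs]
    return signal, noise


def is_feasible(arm_length, power, max_arm_length=5.0, max_power=300.0):
    """Hypothetical feasibility constraints (lab size, available power)."""
    return arm_length <= max_arm_length and power <= max_power


def best_configuration(theta, freqs, arm_lengths, powers, n_averages=1000):
    """Exhaustive search for the feasible design with the largest F(theta)."""
    best, best_f = None, -math.inf
    for arm_length, power in itertools.product(arm_lengths, powers):
        if not is_feasible(arm_length, power):
            continue
        signal, noise = toy_spectra(arm_length, power, freqs)
        f = fisher_information(theta, signal, noise, n_averages)
        if f > best_f:
            best, best_f = (arm_length, power), f
    return best, best_f


freqs = [1e5 * k for k in range(1, 21)]
best, best_f = best_configuration(
    theta=1e-6,
    freqs=freqs,
    arm_lengths=[0.5, 1.0, 2.0, 5.0, 10.0],
    powers=[1.0, 3.0, 10.0, 30.0, 100.0, 300.0],
)
print("best (arm_length, power):", best)
print("Cramer-Rao lower bound on std(theta):", 1.0 / math.sqrt(best_f))
```

In this toy model the search selects the longest feasible arm and an intermediate power, because the assumed noise has a minimum between the shot-like and heating-like regimes. A realistic study would replace the grid with a proper optimizer and the toy spectra with a validated noise budget.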

The power of this approach is that it separates the physics question (“what signature does this quantum-gravity model predict?”) from the engineering question (“what apparatus is most sensitive to that signature?”). Different QG models — holographic noise, gravitational decoherence, modified commutation relations — predict different signal spectra and correlation structures, leading to different optimal experiment designs.

Example: optimizing for holographic noise vs. gravitational decoherence

Consider two quantum-gravity signatures that the tabletop tests program aims to probe:

Holographic noise (as targeted by GQuEST) predicts correlated displacement fluctuations between overlapping interferometer beams, with a spectral shape determined by the holographic correlation function. The optimal experiment for detecting this signal maximizes the cross-spectral density between two co-located interferometers while minimizing correlated technical noise. Key design parameters: beam overlap geometry, arm length, laser power, and the cross-correlation integration time. Computational optimization reveals that the sensitivity depends strongly on the relative orientation and position of the two interferometers — a parameter space too large to explore by hand.
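The benefit of cross-correlating two instruments can be sketched with a small simulation. This is a simplified time-domain stand-in, assuming white Gaussian noise and a zero-lag correlation in place of a full cross-spectral density estimate; the variances, sample counts, and function names are hypothetical. It shows a weak common signal emerging from independent detector noise as the integration time grows, with the estimator's scatter shrinking as one over the square root of the number of samples.

```python
import random


def cross_correlation_estimate(n_samples, signal_var, noise_var, seed=0):
    """Zero-lag cross-correlation of two channels sharing a common signal.

    Each channel is (common signal + independent noise); the mean of x * y
    converges to the variance of the common signal.
    """
    rng = random.Random(seed)
    signal_std = signal_var ** 0.5
    noise_std = noise_var ** 0.5
    total = 0.0
    for _ in range(n_samples):
        common = rng.gauss(0.0, signal_std)
        x = common + rng.gauss(0.0, noise_std)  # detector 1
        y = common + rng.gauss(0.0, noise_std)  # detector 2
        total += x * y
    return total / n_samples


def expected_std(n_samples, signal_var, noise_var):
    """Standard error of the estimate for zero-mean jointly Gaussian data.

    Uses Var(x * y) = Var(x) * Var(y) + Cov(x, y) ** 2.
    """
    var_xy = (signal_var + noise_var) ** 2 + signal_var ** 2
    return (var_xy / n_samples) ** 0.5


signal_var, noise_var = 0.01, 1.0  # signal power is 1% of the noise power
n_samples = 100_000
estimate = cross_correlation_estimate(n_samples, signal_var, noise_var)
sigma = expected_std(n_samples, signal_var, noise_var)
print("estimated common-signal variance:", estimate)
print("expected standard error:", sigma)
```

Any noise that is correlated between the two channels would add directly to the estimate instead of averaging away, which is why the design problem described above also has to minimize correlated technical noise.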

Gravitational decoherence predicts loss of quantum coherence in massive superposition states, with a decoherence rate that depends on the mass, superposition size, and spatial geometry. The optimal experiment for measuring this effect maximizes the coherence time of a macroscopic quantum state while minimizing environmental decoherence. Key design parameters: test mass, temperature, vibration isolation, and the measurement scheme for witnessing coherence. Here the optimization landscape is qualitatively different — dominated by noise budgets rather than signal geometry.

These two examples show that different physics targets lead to fundamentally different optimal configurations, even when the underlying technology (precision interferometry, quantum-limited measurement) is the same. Computational search is valuable here because intuition built up for one target does not reliably transfer to the other.

Connection to generative optical design

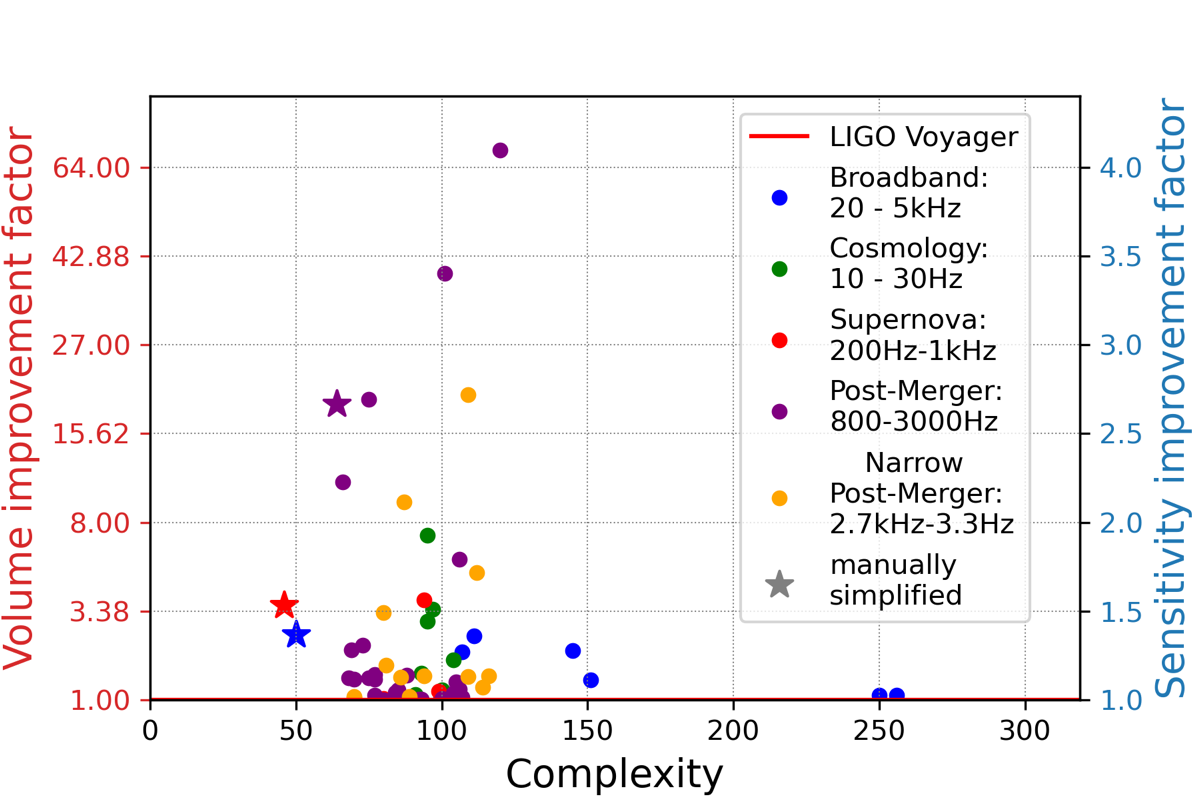

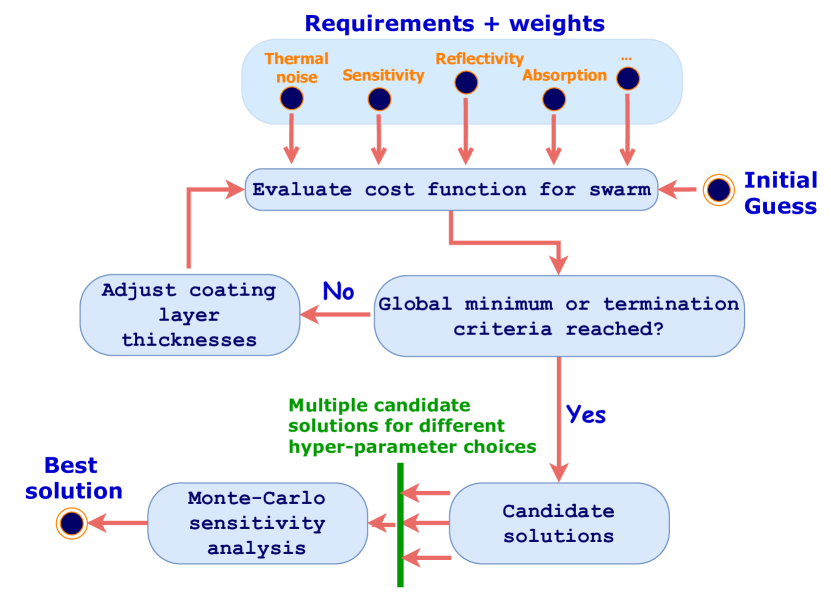

This work shares methodology with the Generative Optical Design project in the AI pillar, which uses gradient-based optimization (the Urania framework) to discover novel gravitational-wave detector topologies. The key difference is the target: generative optical design optimizes for astrophysical sensitivity (detecting mergers and neutron stars), while computational experiment design optimizes for quantum-gravity signatures.

The Urania framework’s core capability — differentiable simulation of arbitrary interferometer topologies — is directly applicable here. By replacing the astrophysical sensitivity objective with a Fisher information objective for a quantum-gravity signal, the same gradient-based search can explore the space of interferometer configurations optimized for fundamental physics. The technical tools overlap, but the objective functions are fundamentally different: detecting a black hole merger requires broadband strain sensitivity; detecting spacetime granularity may require optimizing cross-correlations, specific frequency dependencies, or non-classical measurement bases.
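The idea of keeping the optimizer and swapping the objective can be illustrated with a deliberately small sketch. It does not use or imitate the Urania API: central finite differences stand in for automatic differentiation, the single design parameter is a hypothetical detector bandwidth, and the response in `sensitivity` is an assumed toy model with a gain-bandwidth trade-off. The targeted objective is the log Fisher information for a weak narrowband signal, up to an additive constant, under the assumption that it scales as the squared response at the signal frequency.

```python
import math


def sensitivity(freq, bandwidth):
    """Toy response with a gain-bandwidth trade-off (hypothetical model)."""
    return bandwidth / (bandwidth ** 2 + freq ** 2)


def broadband_objective(log_bw, band=(0.5, 1.0, 2.0, 4.0, 8.0)):
    """Mean log-sensitivity across a band: a stand-in for a broadband goal."""
    bw = math.exp(log_bw)
    return sum(math.log(sensitivity(f, bw)) for f in band) / len(band)


def targeted_objective(log_bw, f0=1.0):
    """Log Fisher information (up to a constant) for a weak signal at f0."""
    bw = math.exp(log_bw)
    return 2.0 * math.log(sensitivity(f0, bw))


def gradient_ascent(objective, x0, learning_rate=0.2, steps=500, eps=1e-5):
    """Plain gradient ascent; finite differences replace autodiff here."""
    x = x0
    for _ in range(steps):
        grad = (objective(x + eps) - objective(x - eps)) / (2.0 * eps)
        x += learning_rate * grad
    return x


# Same optimizer and starting point, two different objectives.
start = math.log(5.0)  # optimize log-bandwidth so the bandwidth stays positive
bw_broadband = math.exp(gradient_ascent(broadband_objective, start))
bw_targeted = math.exp(gradient_ascent(targeted_objective, start))
print("bandwidth favored by broadband objective:", bw_broadband)
print("bandwidth favored by targeted objective:", bw_targeted)
```

In this toy model the broadband objective settles near a bandwidth of 2 (the geometric center of the assumed band) and the targeted objective near 1 (the assumed signal frequency), so the same search routine lands on different designs purely because the objective changed.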

Open questions

- Objective function: What should you optimize for? Different quantum-gravity models predict different signatures. Is there a model-independent figure of merit — perhaps based on the volume of excluded parameter space — or must each experiment be designed for a specific theory? Multi-model optimization that maximizes sensitivity across a portfolio of QG models would be more robust but potentially less sensitive to any single model.

- Feasibility constraints: Computational search can propose exotic configurations that are theoretically optimal but practically impossible. Encoding real-world constraints (available laser power, achievable vacuum levels, detector noise budgets, funding) into the optimization is essential but risks over-constraining the search. Pareto-optimal frontiers, which trade sensitivity against cost and complexity, provide a richer basis for decision-making than single-objective optima.

- Verification: If an algorithm proposes a novel experimental design, how do you verify that it actually has the claimed sensitivity? Independent simulation, analytic cross-checks, and prototype experiments at the Caltech 40m lab all play a role.

- From optimization to discovery: The most valuable outcome of computational experiment design may not be finding the optimal configuration for a known signal, but discovering that certain configurations are surprisingly sensitive to signals that weren’t the original target — serendipitous sensitivity. Systematic exploration of the design space can reveal unexpected connections between experimental parameters and physical observables.
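The Pareto-frontier idea raised under feasibility constraints can be sketched in a few lines. The candidate designs, their names, and their sensitivity and cost values below are made up for illustration; a real study would take them from simulation output and would likely track more than two objectives.

```python
def pareto_front(designs):
    """Return designs not dominated in (higher sensitivity, lower cost).

    A design is dominated if another one is at least as good on both
    objectives and strictly better on at least one.
    """
    front = []
    for d in designs:
        dominated = any(
            o["sensitivity"] >= d["sensitivity"]
            and o["cost"] <= d["cost"]
            and (o["sensitivity"] > d["sensitivity"] or o["cost"] < d["cost"])
            for o in designs
        )
        if not dominated:
            front.append(d)
    return sorted(front, key=lambda d: d["cost"])


# Hypothetical candidates in arbitrary units.
designs = [
    {"name": "A", "sensitivity": 1.0, "cost": 1.0},
    {"name": "B", "sensitivity": 2.0, "cost": 3.0},
    {"name": "C", "sensitivity": 1.5, "cost": 4.0},  # dominated by B
    {"name": "D", "sensitivity": 3.0, "cost": 9.0},
    {"name": "E", "sensitivity": 0.8, "cost": 1.0},  # dominated by A
]
front_names = [d["name"] for d in pareto_front(designs)]
print(front_names)  # prints ['A', 'B', 'D']
```

Presenting the surviving designs, rather than a single optimum, leaves the trade-off between sensitivity and cost as an explicit decision for the experimenters.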